Platform Network Protection Guide

# Cloudflare Overview

We recommend using Cloudflare to shield your platform since it provides various security and performance enhancements out of the box without interfering with the deployment process.

Cloudflare protects your platform by proxying all incoming traffic. During a DDoS attack, you can additionally enable stricter security measures.
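If you need to switch Under Attack mode on or off from a script rather than from the dashboard, Cloudflare's API exposes it as the zone's `security_level` setting. The sketch below only builds the request with Python's standard library and does not send it; the zone ID and API token are placeholders you must supply, and the token is assumed to have permission to edit zone settings. The function name is a hypothetical helper.

```python
"""Build a Cloudflare API request that changes a zone's security level.

Assumption: an API token with permission to edit zone settings.
The zone ID and token passed in are placeholders you must supply.
"""
import json
import urllib.request

API_BASE = "https://api.cloudflare.com/client/v4"


def security_level_request(zone_id: str, token: str, level: str) -> urllib.request.Request:
    """Return a PATCH request for the zone's security_level setting.

    Use level="under_attack" to enable Under Attack mode and your usual
    level (for example "medium") to disable it again.
    """
    body = json.dumps({"value": level}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/zones/{zone_id}/settings/security_level",
        data=body,
        method="PATCH",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


# To send it (requires network access and a valid token):
# with urllib.request.urlopen(security_level_request(ZONE_ID, API_TOKEN, "under_attack")) as resp:
#     print(json.load(resp)["success"])
```

Keeping request construction separate from sending makes it easy to review exactly what will be changed before running it during an incident.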

## Ongoing Attack Mitigation

If your platform is under a DDoS attack, perform the following steps:

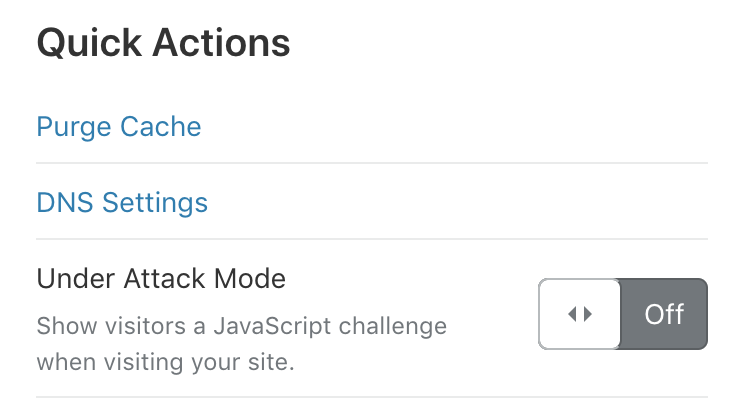

1. Sign in to Cloudflare and enable Under Attack mode for your domain.

2. Open your load balancer logs (ingress controller, Traefik, etc.) and identify the IP addresses that send the most requests.

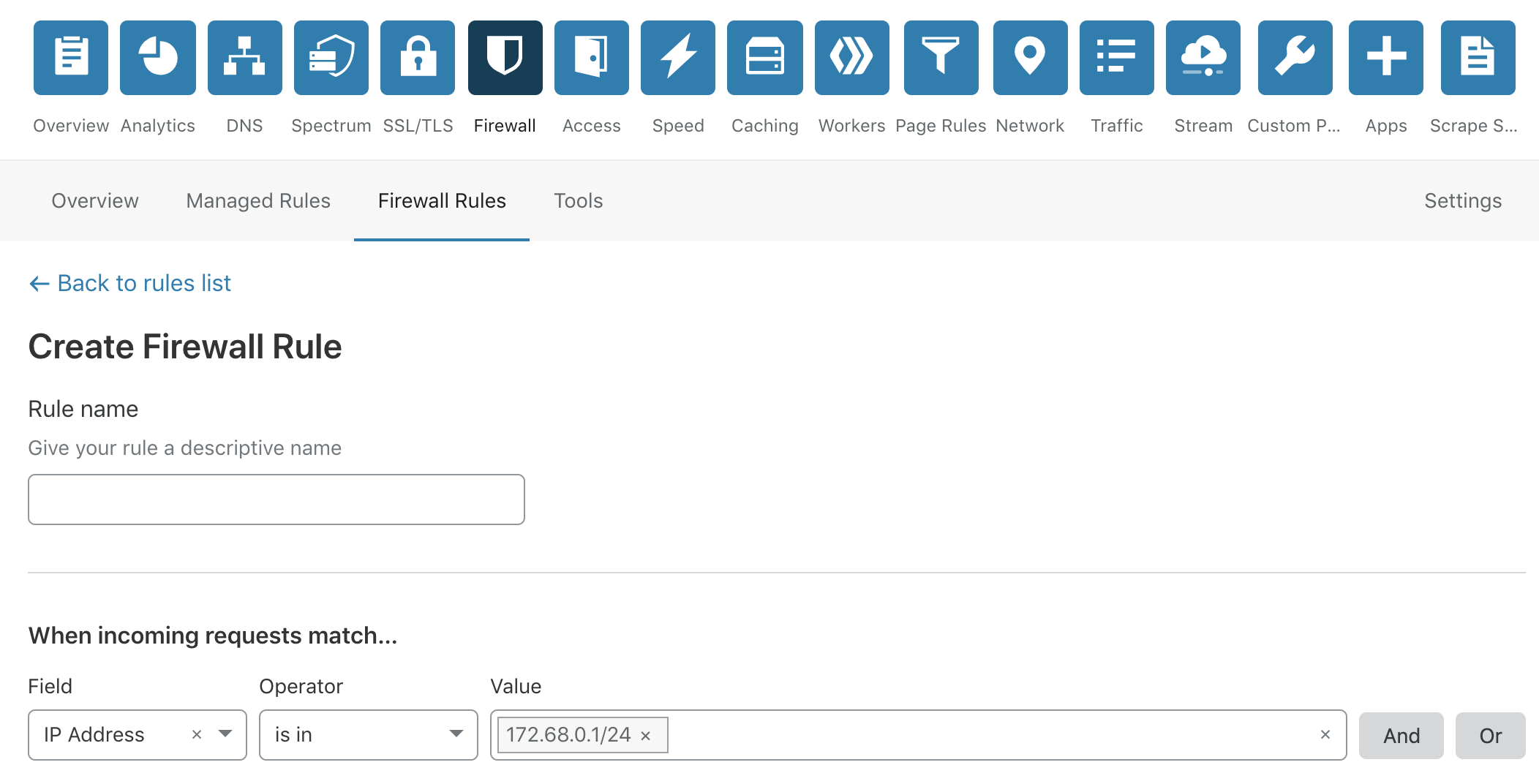

3. In Cloudflare, open your domain and go to Firewall > Firewall Rules.

4. Click Create a Firewall rule and add all the suspicious IP addresses to it.

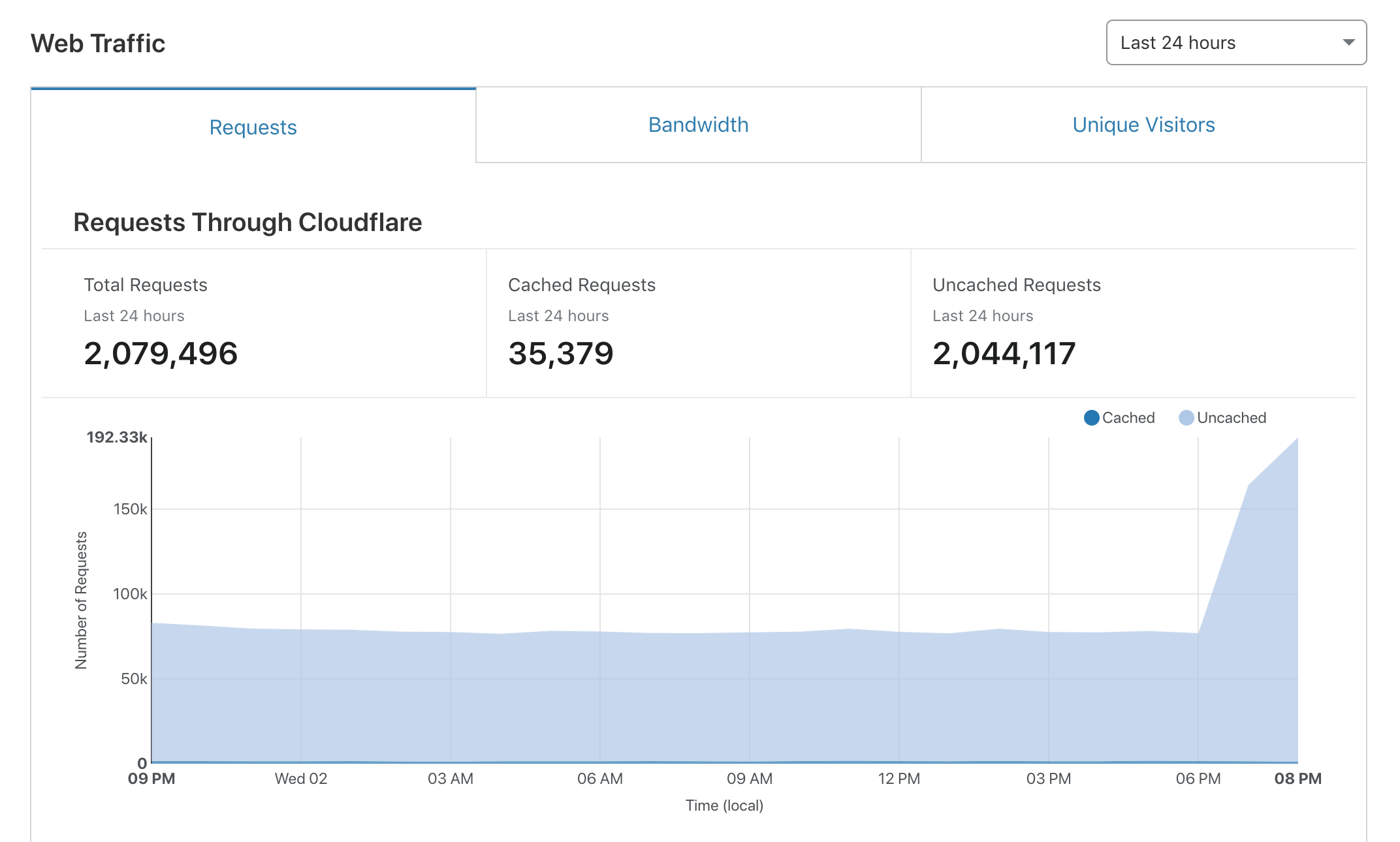

5. Watch incoming traffic on the Analytics page and keep monitoring the load balancer logs to check whether suspicious IP addresses are still sending requests to the platform.

6. Disable Under Attack mode once the attack has been mitigated.
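Step 2 can be partly automated. The following sketch counts requests per client IP, assuming each log line starts with the client address, as in the common and combined log formats used by NGINX and Traefik; adjust `parse_ip()` if your log format differs. `parse_ip` and `top_ips` are hypothetical helpers, not part of any tool.

```python
"""Count the most frequent client IPs in load balancer access logs.

Assumption: each log line starts with the client IP address (common/combined
log format). Lines that do not start with a valid IP are skipped.
"""
import ipaddress
from collections import Counter
from typing import Iterable, List, Optional, Tuple


def parse_ip(line: str) -> Optional[str]:
    """Return the first whitespace-separated field if it is a valid IP address."""
    fields = line.split(maxsplit=1)
    if not fields:
        return None
    try:
        return str(ipaddress.ip_address(fields[0]))
    except ValueError:
        return None  # e.g. controller log lines or error log entries


def top_ips(lines: Iterable[str], n: int = 10) -> List[Tuple[str, int]]:
    """Return the n most frequent client IPs with their request counts."""
    counts = Counter(ip for ip in map(parse_ip, lines) if ip is not None)
    return counts.most_common(n)
```

For example, `top_ips(open("access.log"), n=20)` returns the 20 busiest addresses, which you can review before adding them to the firewall rule in step 4. A high count alone does not prove abuse (a corporate NAT or your own monitoring can also be noisy), so check the addresses before blocking them.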

# Kubernetes NGINX Ingress Rate Limiting

You can add the following annotations to the Ingress that exposes your Envoy API gateway to limit connections, request rates, and transmission rates:

- `nginx.ingress.kubernetes.io/limit-connections`: number of concurrent connections allowed from a single IP address. A 503 error is returned when this limit is exceeded.
- `nginx.ingress.kubernetes.io/limit-rps`: number of requests accepted from a given IP each second. The burst limit is this limit multiplied by the burst multiplier (5 by default). When clients exceed this limit, the status code set by `limit-req-status-code` (default: 503) is returned.
- `nginx.ingress.kubernetes.io/limit-rpm`: number of requests accepted from a given IP each minute. The burst limit is this limit multiplied by the burst multiplier (5 by default). When clients exceed this limit, the status code set by `limit-req-status-code` (default: 503) is returned.
- `nginx.ingress.kubernetes.io/limit-burst-multiplier`: multiplier used to calculate the burst size. This is useful when clients send bursts of requests within a short interval (under 1000 ms): with bursting, the server still accepts up to `limit-rps * limit-burst-multiplier` requests. The default value is 5; a 503 is returned once the limit is exceeded.
- `nginx.ingress.kubernetes.io/limit-rate-after`: initial number of kilobytes after which further transmission of a response to a given connection is rate limited. This feature requires proxy buffering to be enabled.
- `nginx.ingress.kubernetes.io/limit-rate`: number of kilobytes per second allowed to be sent to a given connection. A value of zero disables rate limiting. This feature requires proxy buffering to be enabled.
- `nginx.ingress.kubernetes.io/limit-whitelist`: client IP source ranges to exclude from rate limiting, given as a comma-separated list of CIDRs.
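The burst arithmetic described above is easy to misjudge, so here is a small sketch of it. It also builds an annotation map with string values, because Kubernetes annotation values must be strings. `burst_size` and `rate_limit_annotations` are hypothetical helpers that model the rules stated above; they are not part of ingress-nginx.

```python
"""Model the ingress-nginx burst rule and build rate-limit annotations.

Assumption: burst = limit * multiplier, with a default multiplier of 5,
as described in the annotation list. Helper names are hypothetical.
"""
from typing import Dict, List, Optional

PREFIX = "nginx.ingress.kubernetes.io/"
DEFAULT_BURST_MULTIPLIER = 5


def burst_size(limit: int, multiplier: int = DEFAULT_BURST_MULTIPLIER) -> int:
    """Return the burst size for a limit-rps or limit-rpm value."""
    return limit * multiplier


def rate_limit_annotations(rps: int,
                           multiplier: Optional[int] = None,
                           whitelist: Optional[List[str]] = None) -> Dict[str, str]:
    """Return annotation key/value pairs; every value is a string."""
    annotations = {PREFIX + "limit-rps": str(rps)}
    if multiplier is not None:
        annotations[PREFIX + "limit-burst-multiplier"] = str(multiplier)
    if whitelist:
        # The whitelist is a comma-separated list of CIDRs.
        annotations[PREFIX + "limit-whitelist"] = ",".join(whitelist)
    return annotations
```

With `limit-rps` set to 300 and the default multiplier of 5, for instance, `burst_size(300)` gives a burst of up to 1500 requests from a single IP.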

If you specify multiple of these annotations in a single Ingress rule, the limits are applied in this order: `limit-connections`, `limit-rpm`, `limit-rps`.

To configure these settings globally for all Ingress rules, set the `limit-rate-after` and `limit-rate` values in the NGINX ConfigMap (you can find its name by running `kubectl describe po nginx-ingress-***` and looking for the `--configmap` option). A value set in an Ingress annotation overrides the global setting.
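The precedence rule can be read as a simple lookup: the per-Ingress annotation wins, otherwise the ConfigMap value applies. The sketch below is an illustrative model of that documented behavior, not ingress-nginx code; the function name is hypothetical, and it assumes the ConfigMap keys are named `limit-rate` and `limit-rate-after` without the annotation prefix.

```python
"""Model the annotation-over-ConfigMap precedence for limit-rate settings.

Illustrative only: assumes ConfigMap keys match the annotation names
without the "nginx.ingress.kubernetes.io/" prefix.
"""
from typing import Mapping, Optional

ANNOTATION_PREFIX = "nginx.ingress.kubernetes.io/"


def effective_limit(key: str,
                    configmap_data: Mapping[str, str],
                    ingress_annotations: Mapping[str, str]) -> Optional[str]:
    """Return the effective value of `key` for one Ingress.

    The Ingress annotation takes precedence; otherwise the global ConfigMap
    value applies. None means the setting is not configured anywhere.
    """
    annotated = ingress_annotations.get(ANNOTATION_PREFIX + key)
    if annotated is not None:
        return annotated
    return configmap_data.get(key)
```

For example, with `limit-rate: "100"` in the ConfigMap and `nginx.ingress.kubernetes.io/limit-rate: "50"` on one Ingress, that Ingress is limited to 50 KB/s while all others use 100 KB/s.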

To rate-limit only specific endpoints, create a separate Ingress resource that carries the rate-limiting annotations and lists only those paths in its `spec` section:

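```yaml
# networking.k8s.io/v1 replaces extensions/v1beta1, which was removed in Kubernetes 1.22
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: envoy-rate-limit-markets
  annotations:
    # Annotation values must be strings, so numbers are quoted
    nginx.ingress.kubernetes.io/limit-rps: "300"
    # ...
spec:
  rules:
    - host: www.opendax.com
      http:
        paths:
          # Only these paths are covered by this Ingress and its rate limit
          - path: /api/v2/finex/market/orders
            pathType: Prefix
            backend:
              service:
                name: envoy
                port:
                  number: 10000
          - path: /api/v2/peatio/public/markets
            pathType: Prefix
            backend:
              service:
                name: envoy
                port:
                  number: 10000
```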

# Docker Compose Deployment Protection Using Traefik Rate Limiting

Traefik lets you configure rate limiting for each Compose service through Docker labels.

## Configuration Options

- `average`: the maximum rate, in requests per second by default, allowed from a given source.
- `period`: in combination with `average`, defines the actual maximum rate as `r = average / period`. It defaults to 1 second.
- `burst`: the maximum number of requests allowed to go through in the same arbitrarily small period of time.
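Traefik's documentation describes this middleware in token-bucket terms: `average / period` is the refill rate and `burst` is the bucket size. The sketch below is an illustrative token bucket under that reading, not Traefik's implementation; the class name is hypothetical, and `period` is given in seconds here rather than as a duration string such as `1m`.

```python
"""Illustrative token bucket for Traefik's average/period/burst options.

Not Traefik's code: tokens refill at r = average / period per second and
the bucket holds at most `burst` tokens. `period` is in seconds.
"""
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    average: float       # requests allowed per period
    period: float = 1.0  # period length in seconds
    burst: int = 1       # bucket capacity
    tokens: float = field(init=False, default=0.0)
    last: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        # Start with a full bucket so an initial burst is allowed.
        self.tokens = float(self.burst)

    @property
    def rate(self) -> float:
        """Refill rate in tokens per second: r = average / period."""
        return self.average / self.period

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at `now` (seconds) is accepted."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(float(self.burst), self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

With `TokenBucket(average=100, period=60, burst=50)`, 50 simultaneous requests are accepted and the 51st is rejected; after that the bucket refills at only about 1.67 tokens per second. This is why `burst` controls short spikes while `average` and `period` control the sustained rate.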

## Configuration Example

```yaml
version: '3.6'

services:
  gateway:
    restart: always
    image: envoyproxy/envoy:v1.10.0
    volumes:
      - ../config/gateway:/etc/envoy/
    command: /usr/local/bin/envoy -l info -c /etc/envoy/envoy.yaml
    labels:
      - "traefik.http.routers.gateway-opendax.rule=Host(`www.app.local`) && PathPrefix(`/api`,`/admin`,`/assets/`)"
      - "traefik.enable=true"
      - "traefik.http.services.gateway-opendax.loadbalancer.server.port=8099"
      - "traefik.http.routers.gateway-opendax.entrypoints=web"
      # Rate limiting: 100 requests per minute per source, with bursts of up to 50
      - "traefik.http.middlewares.gateway-opendax.ratelimit.average=100"
      - "traefik.http.middlewares.gateway-opendax.ratelimit.period=1m"
      - "traefik.http.middlewares.gateway-opendax.ratelimit.burst=50"
      # Attach the middleware to the router; without this label the limit is not applied
      - "traefik.http.routers.gateway-opendax.middlewares=gateway-opendax"
```
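Because `period` changes the unit of `average`, it is worth checking what rate a set of labels actually produces. The sketch below extracts the ratelimit options for one middleware from a list of labels and converts them to requests per second. `parse_duration` and `ratelimit_from_labels` are hypothetical helpers; they understand only simple Go-style durations (`h`, `m`, `s`, `ms`) and assume a `burst` of 1 when the label is missing.

```python
"""Sanity-check the rate produced by Traefik ratelimit labels.

Hypothetical helpers: only simple Go-style durations (h, m, s, ms) are
supported, and a missing burst label is assumed to mean 1.
"""
import re
from typing import Dict, Iterable, Tuple

_UNITS = {"h": 3600.0, "m": 60.0, "s": 1.0, "ms": 0.001}
# "ms" is listed before "m" so that "500ms" is not read as minutes.
_DURATION = re.compile(r"(\d+(?:\.\d+)?)(ms|h|m|s)")


def parse_duration(text: str) -> float:
    """Convert a duration such as "1m", "30s" or "1m30s" to seconds."""
    parts = _DURATION.findall(text)
    if not parts or "".join(n + u for n, u in parts) != text:
        raise ValueError(f"unsupported duration: {text!r}")
    return sum(float(n) * _UNITS[u] for n, u in parts)


def ratelimit_from_labels(labels: Iterable[str], middleware: str) -> Tuple[float, int]:
    """Return (allowed requests per second, burst) for one ratelimit middleware."""
    prefix = f"traefik.http.middlewares.{middleware}.ratelimit."
    options: Dict[str, str] = {}
    for label in labels:
        key, _, value = label.partition("=")
        if key.startswith(prefix):
            options[key[len(prefix):]] = value
    average = float(options["average"])
    period = parse_duration(options.get("period", "1s"))  # period defaults to 1 second
    burst = int(options.get("burst", "1"))  # assumed default
    return average / period, burst
```

Applied to the labels in the example above, it reports about 1.67 requests per second with a burst of 50, which makes it clear that `period=1m` turns `average=100` into a per-minute limit.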